Average Latency Feedback

Overview

API response time is a critical performance indicator that directly affects user experience. As new features are released, service latency must stay within a defined threshold. This policy monitors the average latency of an API and uses it as feedback to control a load ramp.

Configuration

This policy is based on the Load Ramping with Average Latency Feedback blueprint. The service is instrumented with the Aperture SDK. A new feature, awesome-feature, is encapsulated within a control point in the code.

The load_ramp section details the ramping procedure:

- awesome-feature is the control point targeted by the ramp.
- The ramp begins with 1% of traffic directed to the new feature and gradually increases to 100% over 300 seconds.
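The ramp schedule can be pictured with a short Python sketch. It assumes that the accept percentage is linearly interpolated from one step's target to the next over that step's duration, and that ramp time only accumulates while the ramp is moving forward. Both are assumptions for illustration, not details taken from the Aperture implementation, and `Step` and `accept_percentage` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Step:
    duration_s: float  # time to move from the previous step's target to this one
    target_accept_percentage: float


# Steps copied from the load_ramp section of values.yaml.
STEPS = [Step(0, 1.0), Step(300, 100.0)]


def accept_percentage(steps: list[Step], forward_time_s: float) -> float:
    """Accept percentage after the ramp has moved forward for forward_time_s seconds.

    Assumes linear interpolation between consecutive steps (hypothetical model).
    """
    current = steps[0].target_accept_percentage  # the first step applies immediately
    remaining = forward_time_s
    for prev, step in zip(steps, steps[1:]):
        if remaining >= step.duration_s:
            # This step is fully completed; carry the leftover time to the next one.
            remaining -= step.duration_s
            current = step.target_accept_percentage
            continue
        # Partway through this step: interpolate between the two targets.
        fraction = remaining / step.duration_s
        return prev.target_accept_percentage + fraction * (
            step.target_accept_percentage - prev.target_accept_percentage
        )
    return current


for t in (0, 150, 300):
    print(t, accept_percentage(STEPS, t))
```

With these steps, 150 seconds of forward progress lands halfway between 1% and 100% (50.5%). Under the same assumptions, 300 seconds is the minimum ramp time: with the 10s evaluation interval, reaching 100% takes 30 consecutive forward evaluations.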

The load ramp is started manually by applying the dynamic configuration for this policy, as specified in the dynamic-values.yaml file below.

During the ramp, the average latency of the /checkout API on the checkout-service.prod.svc.cluster.local service is monitored. While the average latency stays below 75ms, the awesome-feature ramp proceeds. If the average latency exceeds 75ms, the policy automatically resets the ramp to the initial 1% step.
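The feedback rule can be sketched in Python. `average_latency_ms` mirrors the ratio in the generated PromQL query (the increase in the flux meter's latency sum divided by the increase in its request count over a 30s window), and `decide` mirrors the two deciders: `lt` 75 drives forward and `gt` 75 drives reset. The function names, the millisecond unit, and treating an empty window as "hold" are assumptions of this sketch.

```python
from typing import Optional

# Both criteria thresholds in values.yaml are 75 (assumed to be milliseconds).
THRESHOLD_MS = 75.0


def average_latency_ms(sum_increase_ms: float, count_increase: float) -> Optional[float]:
    """Average latency over a window, like sum(increase(flux_meter_sum[30s])) /
    sum(increase(flux_meter_count[30s])). Returns None when no requests were seen."""
    if count_increase == 0:
        return None
    return sum_increase_ms / count_increase


def decide(avg_ms: Optional[float]) -> str:
    """Map an average latency to a ramp action (hypothetical helper)."""
    if avg_ms is None:
        return "hold"  # assumption: no data means neither decider fires
    if avg_ms < THRESHOLD_MS:  # decider with operator lt -> FORWARD_0
        return "forward"
    if avg_ms > THRESHOLD_MS:  # decider with operator gt -> RESET_0
        return "reset"
    return "hold"  # exactly 75 ms: neither strict comparison holds


print(decide(average_latency_ms(1200.0, 20)))  # 60 ms -> forward
print(decide(average_latency_ms(1800.0, 20)))  # 90 ms -> reset
```

Because both deciders use strict comparisons, an average of exactly 75ms neither advances nor resets the ramp.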

The values.yaml file below can be generated by following the steps in the Installation section.

Both files are shown below: values.yaml first, followed by dynamic-values.yaml.

# yaml-language-server: $schema=../../../../../../blueprints/load-ramping/base/gen/definitions.json
# Generated values file for load-ramping/base blueprint
# Documentation/Reference for objects and parameters can be found at:
# https://docs.fluxninja.com/reference/blueprints/load-ramping/base

policy:
  drivers:
    average_latency_drivers:
      - criteria:
          forward:
            threshold: 75
          reset:
            threshold: 75
        selectors:
          - control_point: ingress
            service: checkout-service.prod.svc.cluster.local
            label_matcher:
              match_labels:
                http.path: /checkout
  # List of additional circuit components.
  # Type: []aperture.spec.v1.Component
  components: []
  # The interval between successive evaluations of the Circuit.
  # Type: string
  evaluation_interval: "10s"
  # Identify the service and flows of the feature that needs to be rolled out. And specify load ramp steps.
  # Type: aperture.spec.v1.LoadRampParameters
  # Required: True
  load_ramp:
    sampler:
      selectors:
        - control_point: awesome-feature
          service: checkout-service.prod.svc.cluster.local
      label_key: ""
    steps:
      - duration: "0s"
        target_accept_percentage: 1.0
      - duration: "300s"
        target_accept_percentage: 100.0
  # Name of the policy.
  # Type: string
  # Required: True
  policy_name: "load-ramping"
  # List of additional resources.
  # Type: aperture.spec.v1.Resources
  resources:
    flow_control:
      classifiers: []
  # Whether to start the ramping. This setting may be overridden at runtime via dynamic configuration.
  # Type: bool
  start: false

# yaml-language-server: $schema=../../../../../../blueprints/load-ramping/base/gen/dynamic-config-definitions.json
# Generated values file for load-ramping/base/average-latency blueprint
# Documentation/Reference for objects and parameters can be found at:
# https://docs.fluxninja.com/reference/policies/bundled-blueprints/policies/load-ramping/base

# Start load ramp. This setting can be updated at runtime without shutting down the policy. The load ramp gets paused if this flag is set to false in the middle of a load ramp.
# Type: bool
start: true
# Reset load ramp to the first step. This setting can be updated at runtime without shutting down the policy.
# Type: bool
reset: false
pass_through_label_values: []

Generated Policy

apiVersion: fluxninja.com/v1alpha1
kind: Policy
metadata:
  labels:
    fluxninja.com/validate: "true"
  name: load-ramping
spec:
  circuit:
    components:
      - query:
          promql:
            evaluation_interval: 10s
            out_ports:
              output:
                signal_name: AVERAGE_LATENCY_0
            query_string:
              sum(increase(flux_meter_sum{flow_status="OK", flux_meter_name="feature-rollout/average_latency/0",
              policy_name="feature-rollout"}[30s]))/sum(increase(flux_meter_count{flow_status="OK",
              flux_meter_name="feature-rollout/average_latency/0", policy_name="feature-rollout"}[30s]))
      - decider:
          in_ports:
            lhs:
              signal_name: AVERAGE_LATENCY_0
            rhs:
              constant_signal:
                value: 75
          operator: lt
          out_ports:
            output:
              signal_name: FORWARD_0
      - decider:
          in_ports:
            lhs:
              signal_name: AVERAGE_LATENCY_0
            rhs:
              constant_signal:
                value: 75
          operator: gt
          out_ports:
            output:
              signal_name: RESET_0
      - bool_variable:
          config_key: rollout
          constant_output: false
          out_ports:
            output:
              signal_name: USER_ROLLOUT_CONTROL
      - bool_variable:
          config_key: reset
          constant_output: false
          out_ports:
            output:
              signal_name: USER_RESET_CONTROL
      - or:
          in_ports:
            inputs: []
          out_ports:
            output:
              signal_name: BACKWARD_INTENT
      - or:
          in_ports:
            inputs:
              - signal_name: RESET_0
              - signal_name: USER_RESET_CONTROL
          out_ports:
            output:
              signal_name: RESET
      - or:
          in_ports:
            inputs:
              - signal_name: FORWARD_0
          out_ports:
            output:
              signal_name: FORWARD_INTENT
      - inverter:
          in_ports:
            input:
              signal_name: BACKWARD_INTENT
          out_ports:
            output:
              signal_name: INVERTED_BACKWARD_INTENT
      - first_valid:
          in_ports:
            inputs:
              - signal_name: INVERTED_BACKWARD_INTENT
              - constant_signal:
                  value: 1
          out_ports:
            output:
              signal_name: NOT_BACKWARD
      - inverter:
          in_ports:
            input:
              signal_name: RESET
          out_ports:
            output:
              signal_name: INVERTED_RESET
      - first_valid:
          in_ports:
            inputs:
              - signal_name: INVERTED_RESET
              - constant_signal:
                  value: 1
          out_ports:
            output:
              signal_name: NOT_RESET
      - and:
          in_ports:
            inputs:
              - signal_name: NOT_BACKWARD
              - signal_name: NOT_RESET
              - signal_name: USER_ROLLOUT_CONTROL
              - signal_name: FORWARD_INTENT
          out_ports:
            output:
              signal_name: FORWARD
      - and:
          in_ports:
            inputs:
              - signal_name: BACKWARD_INTENT
              - signal_name: NOT_RESET
          out_ports:
            output:
              signal_name: BACKWARD
      - flow_control:
          load_ramp:
            in_ports:
              backward:
                signal_name: BACKWARD
              forward:
                signal_name: FORWARD
              reset:
                signal_name: RESET
            parameters:
              sampler:
                label_key: ""
                selectors:
                  - control_point: awesome-feature
                    service: checkout-service.prod.svc.cluster.local
              steps:
                - duration: 0s
                  target_accept_percentage: 1
                - duration: 300s
                  target_accept_percentage: 100
            pass_through_label_values_config_key: pass_through_label_values
    evaluation_interval: 10s
  resources:
    flow_control:
      classifiers: []
    flux_meters:
      feature-rollout/average_latency/0:
        selectors:
          - control_point: ingress
            label_matcher:
              match_labels:
                http.path: /checkout
            service: checkout-service.prod.svc.cluster.local
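The boolean wiring of the generated circuit can be traced with the Python sketch below. Invalid (missing) signals are modeled as `None`, and the `and`/`or` components are assumed to follow three-valued logic in which an invalid input counts as unknown; an `or` with no inputs is assumed to produce false. These semantics and the helper names `k_and`, `k_or`, `invert`, `first_valid`, and `circuit` are assumptions for illustration, not Aperture's API.

```python
from typing import Optional

Signal = Optional[bool]  # None models an invalid (missing) signal


def k_and(*xs: Signal) -> Signal:
    # Three-valued AND: any False wins; otherwise any unknown input makes it unknown.
    if any(x is False for x in xs):
        return False
    if any(x is None for x in xs):
        return None
    return True


def k_or(*xs: Signal) -> Signal:
    # Three-valued OR: any True wins; no inputs at all yields False (assumption).
    if any(x is True for x in xs):
        return True
    if any(x is None for x in xs):
        return None
    return False


def invert(x: Signal) -> Signal:
    # An invalid input stays invalid.
    return None if x is None else not x


def first_valid(*xs: Signal) -> Signal:
    # Return the first input that is not invalid.
    return next((x for x in xs if x is not None), None)


def circuit(avg_latency_ms: Optional[float], user_rollout_control: bool,
            user_reset_control: bool) -> dict[str, Signal]:
    forward_0 = None if avg_latency_ms is None else avg_latency_ms < 75  # decider lt
    reset_0 = None if avg_latency_ms is None else avg_latency_ms > 75    # decider gt
    reset = k_or(reset_0, user_reset_control)
    backward_intent = k_or()  # BACKWARD_INTENT has no inputs in this policy
    forward_intent = k_or(forward_0)
    not_backward = first_valid(invert(backward_intent), True)  # constant 1 fallback
    not_reset = first_valid(invert(reset), True)
    return {
        "FORWARD": k_and(not_backward, not_reset, user_rollout_control, forward_intent),
        "BACKWARD": k_and(backward_intent, not_reset),
        "RESET": reset,
    }
```

In this sketch, a 60ms average with rollout enabled asserts FORWARD, while a 90ms average asserts RESET and forces FORWARD to false through NOT_RESET. With no latency data, FORWARD comes out unknown rather than true.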

Installation

Generate a values file specific to the policy with the following command.

aperturectl blueprints values --name=load-ramping/base --version=main --output-file=values.yaml

Adjust the values to match the application requirements. Use the following command to generate the policy.

aperturectl blueprints generate --name=load-ramping/base --values-file=values.yaml --output-dir=policy-gen --version=main

Apply the policy using the aperturectl CLI or kubectl.


Pass the --kube flag with aperturectl to apply the generated policy directly to a Kubernetes cluster in the namespace where the Aperture Controller is installed.

aperturectl apply policy --file=policy-gen/policies/load-ramping.yaml --kube

Alternatively, apply the generated policy YAML (a Kubernetes Custom Resource) with kubectl.

kubectl apply -f policy-gen/configuration/load-ramping-cr.yaml -n aperture-controller

Start Rollout

The load ramp is started through the policy's dynamic configuration. Dynamic values can be changed at runtime without shutting down the policy.

Generate the dynamic values file with the command below, then set the start parameter to true in it.

aperturectl blueprints dynamic-values --name=load-ramping/base --version=main --output-file=dynamic-values.yaml

Apply the dynamic configuration with aperturectl or kubectl using one of the following commands.


aperturectl apply dynamic-config --policy=load-ramping --file=dynamic-values.yaml

kubectl apply -f dynamic-values.yaml -n aperture-controller

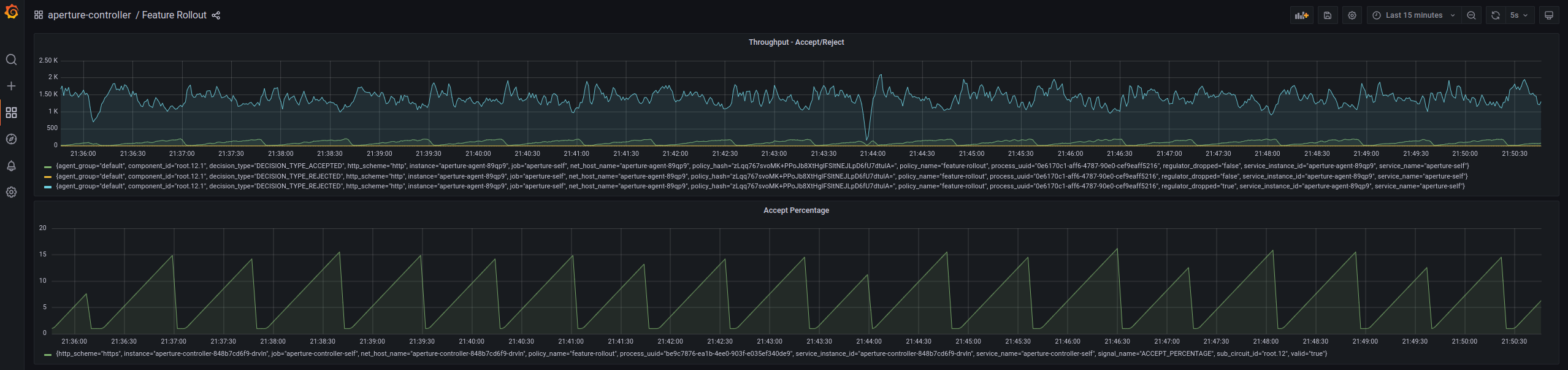

Policy in Action

In this scenario, the new awesome-feature causes a performance regression in the service, increasing response times. As the ramp percentage increases, the average latency exceeds the 75ms threshold, prompting the policy to automatically reset the ramp to the initial 1% step. Latency then returns to normal levels.
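The scenario can be reproduced as a toy simulation in Python. The latency model (50ms plus 0.4ms per percent of traffic on awesome-feature) is entirely hypothetical, the ramp is assumed to interpolate linearly from 1% to 100% over 300s, and latency is assumed to respond immediately to the ramp, ignoring the 30s averaging window. `simulate` and the other names are illustrative only.

```python
EVAL_INTERVAL_S = 10    # evaluation_interval from the policy
RAMP_DURATION_S = 300   # duration of the second ramp step
THRESHOLD_MS = 75.0     # forward/reset threshold


def accept_pct(forward_time_s: float) -> float:
    # Linear ramp from 1% to 100% over 300 s (assumed interpolation).
    return 1.0 + 99.0 * min(forward_time_s, RAMP_DURATION_S) / RAMP_DURATION_S


def hypothetical_latency_ms(pct: float) -> float:
    # Hypothetical regression: each 1% of traffic on awesome-feature adds 0.4 ms.
    return 50.0 + 0.4 * pct


def simulate(ticks: int) -> list[tuple[float, float, str]]:
    forward_time = 0.0
    history = []
    for _ in range(ticks):
        pct = accept_pct(forward_time)
        latency = hypothetical_latency_ms(pct)
        if latency < THRESHOLD_MS:
            action = "forward"
            forward_time += EVAL_INTERVAL_S  # advance by one evaluation interval
        elif latency > THRESHOLD_MS:
            action = "reset"
            forward_time = 0.0  # back to the first step (1%)
        else:
            action = "hold"
        history.append((pct, latency, action))
    return history


for pct, latency, action in simulate(25):
    print(f"{pct:6.2f}% {latency:6.2f} ms {action}")
```

Under this hypothetical model, the ramp climbs to roughly 64% before average latency crosses 75ms, is reset to 1%, and then begins climbing again because the latency has dropped back below the threshold.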

Circuit Diagram for this policy.